Data Loading While Session Failed

If there are 10,000 records and the session fails partway through loading, how will you load the remaining data?

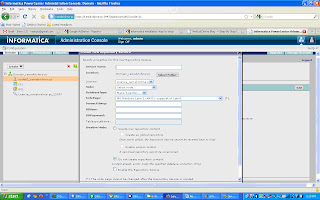

Latest Answer: Use the performance recovery option in the session properties, then recover the session from the last commit level. Regards, Puneet ...
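The idea of resuming from the last commit point can be illustrated with a minimal Python sketch. This is not Informatica's implementation; `load_with_recovery`, `state`, `commit_interval`, and `fail_at` are hypothetical names chosen for the illustration, and the "target" is just an in-memory list.

```python
def load_with_recovery(records, target, state, commit_interval=1000, fail_at=None):
    """Append records to target in batches, committing after each batch.

    state["last_committed"] plays the role of the last commit point: a rerun
    starts from there instead of from row 0. fail_at simulates a failure.
    """
    start = state.get("last_committed", 0)
    for begin in range(start, len(records), commit_interval):
        batch = records[begin:begin + commit_interval]
        if fail_at is not None and begin <= fail_at < begin + len(batch):
            raise RuntimeError(f"simulated session failure at row {fail_at}")
        target.extend(batch)                          # write the batch
        state["last_committed"] = begin + len(batch)  # record the commit point
    return state.get("last_committed", 0)


records = list(range(10000))
target, state = [], {}
try:
    load_with_recovery(records, target, state, fail_at=4500)
except RuntimeError:
    pass  # rows 0..3999 are committed; the batch holding row 4500 is not
load_with_recovery(records, target, state)  # the rerun resumes at row 4000
```

After the second call the target holds all 10,000 rows exactly once, because the uncommitted batch was never written and the rerun skipped the committed ones.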

Session Performance

How would you improve session performance?

Latest Answer: Session performance can be improved by allocating cache memory so that all the transformations can execute within that cache size. Mainly, only the transformations that use a cache are considered performance bottlenecks. For example: We ...

Informatica Tracing Levels

What are the tracing levels in Informatica transformations? Which one is most efficient, which is fastest, and which is best in Informatica PowerCenter 8.1/8.5?

Latest Answer: Which level is most efficient and fastest depends on your requirement. If you want minimal information written to the session logs, you can use the Terse tracing level. From the Informatica Transformation Guide: "To add a slight performance boost, you can ...

Transaction Control

Explain why it is bad practice to place a Transaction Control transformation upstream from a SQL transformation.

Latest Answer: First, as mentioned in the other posts, the SQL transformation drops all incoming transaction control boundaries. Besides, you can use COMMIT and ROLLBACK statements within the SQL transformation script to control the transactions, thus avoiding the need for the Transaction ...

Update Strategy Transformation

Why should the input pipelines to the Joiner not contain an Update Strategy transformation?

Latest Answer: Update Strategy flags each row for either Insert, Update, Delete, or Reject. I think when you use it before a Joiner, the Joiner drops all the flagging details. This is a curious question, though; I cannot imagine how one would expect to deal with the scenario ...

Passive Router Transformation

The Router is an active transformation, but one may argue that it is passive because, if we use only the default group, there is no change in the number of rows. What explanation will you give?

Latest Answer: A transformation is called active if it varies the number of rows passing through it, for example total incoming rows = 1000 and total outgoing rows <> 1000. In the case of the Router transformation, the count of total output rows in all the routing groups is ...
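The row-count argument can be made concrete with a small Python sketch. It only mimics router-style grouping and is not Informatica code; `route`, the group names, and the conditions are assumptions made for the illustration. A row is sent to every group whose condition it meets, and rows that meet none go to a default group.

```python
def route(rows, groups):
    """Send each row to every group whose condition it meets.

    Rows that satisfy no condition land in the "DEFAULT" group.
    """
    out = {name: [] for name in groups}
    out["DEFAULT"] = []
    for row in rows:
        matched = False
        for name, condition in groups.items():
            if condition(row):
                out[name].append(row)
                matched = True
        if not matched:
            out["DEFAULT"].append(row)
    return out


rows = list(range(1, 11))  # 10 incoming rows
routed = route(rows, {"small": lambda x: x <= 5, "even": lambda x: x % 2 == 0})
total_out = sum(len(group) for group in routed.values())

only_default = route(rows, {})  # no user-defined groups at all
```

With the overlapping conditions above, rows 2 and 4 appear in both groups, so `total_out` is 12 for 10 incoming rows. With only the default group, the count happens to stay at 10, which is the special case the question describes.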

Update Strategy

In which situations do we use the Update Strategy transformation?

Latest Answer: We use Update Strategy when we need to alter the database operation (Insert/Update/Delete) based on the data passing through and some logic. Update Strategy allows us to Insert (DD_INSERT), Update (DD_UPDATE), and Delete (DD_DELETE) based on logic specified ...
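As a rough illustration of row-level flagging, the sketch below assigns one of the DD_* constants to each row in Python. The numeric values match the constants Informatica documents (0 to 3), but `flag_row`, the `is_deleted` field, and the decision rules are hypothetical and stand in for whatever logic a real mapping would use.

```python
# Row-flagging constants, using the numeric values of the Informatica DD_* constants.
DD_INSERT, DD_UPDATE, DD_DELETE, DD_REJECT = 0, 1, 2, 3


def flag_row(row, existing_ids):
    """Pick a database operation for one row (hypothetical rules)."""
    if row.get("id") is None:
        return DD_REJECT   # no key, so the row cannot be applied
    if row.get("is_deleted"):
        return DD_DELETE   # source marks the row as removed
    if row["id"] in existing_ids:
        return DD_UPDATE   # key already present in the target
    return DD_INSERT       # new key


existing_ids = {1, 2}
source_rows = [
    {"id": 1, "name": "a"},
    {"id": 2, "is_deleted": True},
    {"id": 3, "name": "c"},
    {"id": None, "name": "?"},
]
flags = [flag_row(row, existing_ids) for row in source_rows]
```

Here `flags` comes out as update, delete, insert, reject in that order, one decision per row rather than one operation for the whole load.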

Unconnected Lookup

In which situations do we use an unconnected lookup?

Latest Answer: The advantage of using an Unconnected Lookup is that the lookup is executed only when a certain condition is met, which improves the performance of your mapping. If the majority of your records will meet the condition, then an unconnected ...
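The performance argument is simply that a conditional call runs the lookup for fewer rows. A minimal Python sketch of that idea follows; `CountingLookup`, `fill_region`, and the field names are hypothetical, and the dictionary stands in for a lookup table.

```python
class CountingLookup:
    """A stand-in lookup that counts how often it is actually executed."""

    def __init__(self, table):
        self.table = table
        self.calls = 0

    def __call__(self, key):
        self.calls += 1
        return self.table.get(key)


def fill_region(rows, lookup):
    """Call the lookup only for rows whose region is missing."""
    result = []
    for row in rows:
        region = row["region"]
        if region is None:  # the condition that triggers the lookup
            region = lookup(row["customer_id"])
        result.append({**row, "region": region})
    return result


lookup = CountingLookup({10: "EMEA", 11: "APAC"})
rows = [
    {"customer_id": 10, "region": None},
    {"customer_id": 11, "region": "APAC"},
    {"customer_id": 12, "region": "AMER"},
]
filled = fill_region(rows, lookup)
```

Only one of the three rows triggers the lookup, so `lookup.calls` ends at 1. If nearly every row met the condition, the conditional call would save little, which is the trade-off the answer points to.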

Dependency Problems

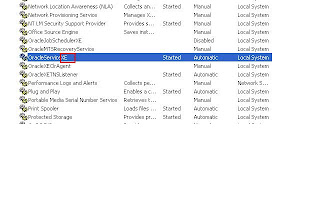

What are the possible dependency problems while running a session?

Latest Answer: A dependency problem arises when the output of one process is the input to another process. If the first process is stopped, it causes problems for the other process or stops it from running. One process depends on the other. If one process is affected, then ...

Error Handling Logic

How do you handle error logic in Informatica? Which transformations did you use while handling errors? How did you reload those error records into the target?

Latest Answer: Bad files contain a column indicator and a row indicator. Row indicator: It generally comes into play when working with the Update Strategy transformation. The writer/target rejects the rows going to the target. Column indicator: D - valid, O - overflow, N - null, T - truncated. When ...
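The column indicator codes listed in the answer can be decoded with a small lookup table. The Python sketch below assumes the values have already been split into (value, indicator) pairs; it does not parse the actual bad file layout, and `problem_columns` is a hypothetical helper.

```python
# Column indicator codes as listed in the answer above.
COLUMN_INDICATORS = {
    "D": "valid",
    "O": "overflow",
    "N": "null",
    "T": "truncated",
}


def problem_columns(pairs):
    """Return (position, meaning) for every column that is not valid.

    pairs is a list of (value, indicator) tuples for one rejected row;
    unrecognized codes are reported as "unknown".
    """
    problems = []
    for position, (_value, indicator) in enumerate(pairs, start=1):
        meaning = COLUMN_INDICATORS.get(indicator.upper(), "unknown")
        if meaning != "valid":
            problems.append((position, meaning))
    return problems


rejected_row = [("1921", "D"), ("", "N"), ("Nelson William", "T")]
issues = problem_columns(rejected_row)
```

For the sample row, `issues` reports column 2 as null and column 3 as truncated, which is the kind of summary that helps decide what to correct before reloading.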